S01E12 The superpower of computational modeling

Digital twins, complexity explorables, computational models, F1 & the best of the rest (tech debt, ChatGPT, 10 million pounds of content sludge from New York and New Jersey)

In the last few episodes, I wrote about models and how they can be used to represent reality. Ultimately, we want to use this representation to improve reality. Whether we want to design, optimize, control, simulate, or predict the behavior of a system, conceptual models are just the groundwork. Computational models let us increase our leverage over the system.

In episode 6, I talked about social echo chambers in the context of our increasingly VUCA environment. At the time, did you wonder how one dominant opinion could emerge while others disappear? Or how opinions shift and cluster around certain ideas? Could we create a model to help us understand this system?

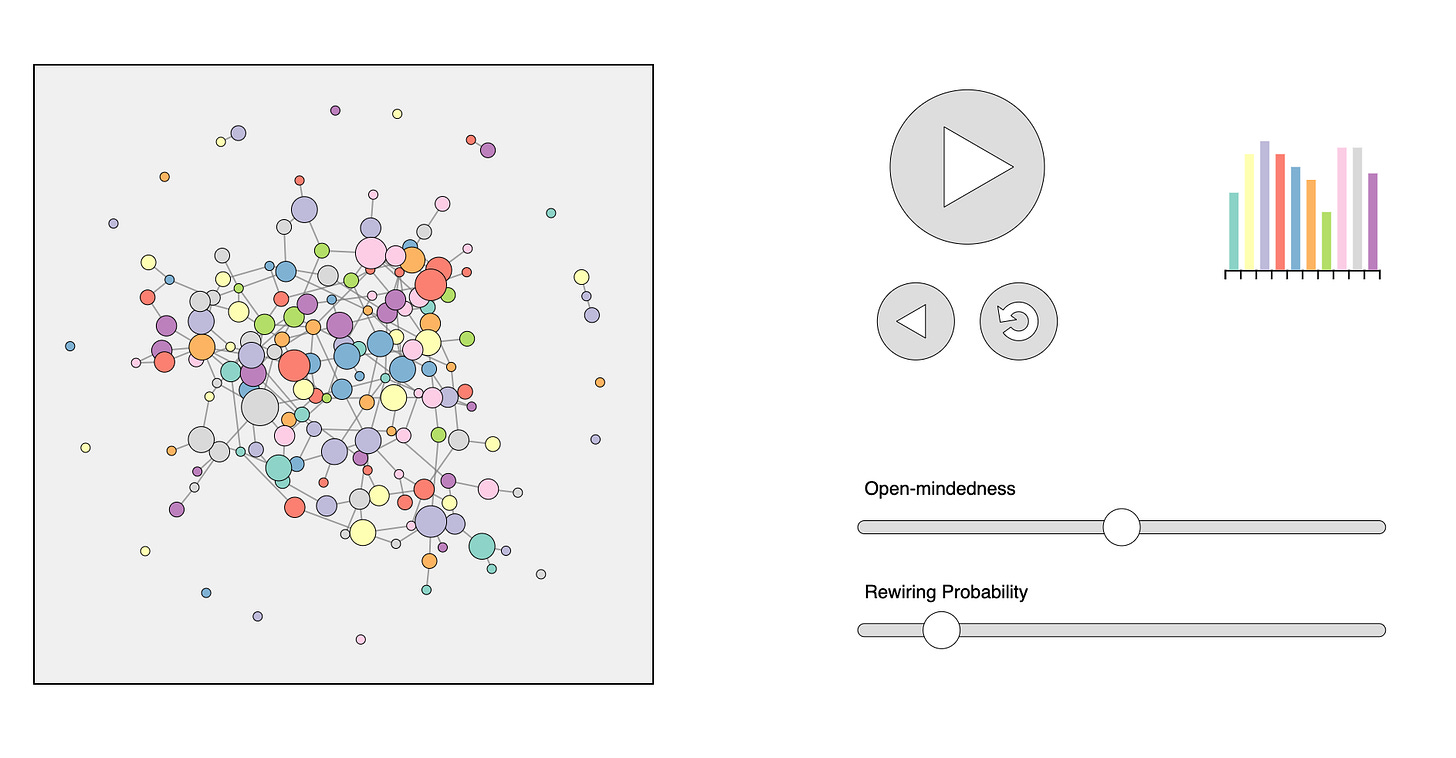

Physicist and complex systems researcher Dirk Brockmann has created an impressive collection of interactive models of complex systems in biology, physics, mathematics, social sciences, epidemiology, ecology, and other fields. One of them illustrates the phenomenon of opinion dynamics:

Unfortunately, I cannot embed the interactive model in this publication, but I encourage you to try it out for yourself on the explorables website. There are no sign-ups - not even a cookie banner!

The model starts with a fixed number of nodes representing people, connected by links. If two people with different opinions are connected, one of them might change their opinion to match the other person's. Alternatively, the link between them might be broken, and one of the two will connect with someone else chosen at random.

The sliders - enabled by computation - let you control the probability that the link will be broken (rewiring), and whether people prefer to connect with like-minded people or not (open-mindedness).

If you set the rewiring probability high (making it more likely that links break) and open-mindedness low, the group quickly splits into smaller groups with uniform opinions. If instead you make links less likely to break, one opinion eventually becomes dominant.
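For readers who like to tinker, here is a minimal Python sketch of the kind of model described above, often called an adaptive voter model. It is not the code behind Brockmann's explorable: the function `simulate_opinions` and its parameters `rewiring` and `open_mindedness` are my own hypothetical stand-ins for the two sliders, and the way open-mindedness works here (closed-minded people rewire to a like-minded peer when they can) is an assumption.

```python
import random


def simulate_opinions(n_people=100, n_links=200, n_opinions=3,
                      rewiring=0.5, open_mindedness=0.5,
                      steps=20_000, seed=1):
    """Adaptive voter model sketch. Returns (opinions, links).

    Hypothetical parameters standing in for the explorable's sliders:
    rewiring: chance that a disagreeing pair breaks their link
    open_mindedness: chance that a new link goes to anyone at all
    """
    rng = random.Random(seed)
    opinions = [rng.randrange(n_opinions) for _ in range(n_people)]

    def key(a, b):
        return (min(a, b), max(a, b))  # undirected link as a sorted pair

    # Random initial network: no self-loops, no duplicate links.
    links = set()
    while len(links) < n_links:
        a, b = rng.sample(range(n_people), 2)
        links.add(key(a, b))

    for _ in range(steps):
        discordant = [link for link in links
                      if opinions[link[0]] != opinions[link[1]]]
        if not discordant:
            break  # every linked pair agrees: the dynamics have frozen
        i, j = rng.choice(discordant)
        if rng.random() < 0.5:
            i, j = j, i  # randomly pick which of the two acts
        if rng.random() < rewiring:
            # Break the link; person i connects to someone new instead.
            links.discard(key(i, j))
            free = [k for k in range(n_people)
                    if k not in (i, j) and key(i, k) not in links]
            like_minded = [k for k in free if opinions[k] == opinions[i]]
            # Assumption: closed-minded people pick a like-minded peer if
            # one is available; open-minded people pick anyone at random.
            if like_minded and rng.random() >= open_mindedness:
                pool = like_minded
            else:
                pool = free
            links.add(key(i, rng.choice(pool)) if pool else key(i, j))
        else:
            opinions[i] = opinions[j]  # person i adopts j's opinion
    return opinions, links


for rewiring in (0.1, 0.9):
    opinions, _ = simulate_opinions(rewiring=rewiring, open_mindedness=0.1)
    print(rewiring, "surviving opinions:", len(set(opinions)))
```

Counting the opinions that survive for a few slider settings is a quick way to see whether the group fragments or converges. With low rewiring, the pure copying dynamics can take many steps to settle, so you may need to raise `steps`.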

I’m sure some readers are starting to question my promise of writing more actionable content (or even my ability to do so), but there are lessons here that apply to anybody trying to manage a complex adaptive system like a company:

First of all, opinion dynamics play out in companies as well. Companies that encourage a diversity of opinions will learn faster than monocultures. The presence of diverse opinions can help challenge assumptions and beliefs, leading to a deeper understanding of issues and a stronger foundation for decision-making.

Second, computational models can help us develop an intuition for complex system dynamics. While the dynamics can get technical (e.g., diffusion, fractals & self-similarity, flocking, contagion, network growth…), they can lead to very tangible conclusions:

- This simulation on collective intelligence shows how a school of fish can collectively find an optimal location.
- This Autobahn simulation demonstrates how speed variability causes traffic jams.
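I don't know the exact rules of the Autobahn explorable, but a classic model that shows the same effect is the Nagel-Schreckenberg cellular automaton. Below is a short sketch under my own assumptions: a circular road of `length` cells, and a random slowdown probability `p_slow` standing in for speed variability. The function returns the average traffic flow, i.e. the average number of cars passing a given point per time step.

```python
import random


def nagel_schreckenberg(length=200, n_cars=50, v_max=5, p_slow=0.3,
                        steps=500, seed=0):
    """Average traffic flow on a circular road (Nagel-Schreckenberg rules)."""
    rng = random.Random(seed)
    positions = sorted(rng.sample(range(length), n_cars))  # in driving order
    speeds = [0] * n_cars
    total_moved = 0
    for _ in range(steps):
        new_speeds = []
        for idx, (x, v) in enumerate(zip(positions, speeds)):
            ahead = positions[(idx + 1) % n_cars]
            gap = (ahead - x - 1) % length   # empty cells in front
            v = min(v + 1, v_max)            # 1. accelerate
            v = min(v, gap)                  # 2. brake to avoid a crash
            if v > 0 and rng.random() < p_slow:
                v -= 1                       # 3. random slowdown
            new_speeds.append(v)
        speeds = new_speeds
        # 4. All cars move at once; nobody overtakes, so the order holds.
        positions = [(x + v) % length for x, v in zip(positions, speeds)]
        total_moved += sum(speeds)
    return total_moved / (steps * length)


for p in (0.0, 0.2, 0.5):
    print(f"p_slow={p}: flow={nagel_schreckenberg(p_slow=p):.3f}")
```

Raising `p_slow` while keeping the number of cars fixed lowers the flow as stop-and-go waves form, without any accident or bottleneck on the road.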

Static models like causal loop diagrams help us analyze a system, but a computational model also lets us test specific hypotheses and explore the behavior of a system under different conditions.

This is a crucial aspect of modeling. If we do not formulate hypotheses or predictions that we can test, how can we know if our model accurately represents reality?

Things get even more interesting when we start syncing the model with the real-world system it represents. Take digital twins, for example. All models mimic reality, but digital twins do so by receiving data from physical sensors in the actual system, so they continuously mirror the real system’s state.
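To make the syncing idea concrete, here is a deliberately tiny, hypothetical sketch: `CarTwin` and `SensorReading` are illustrative names, not any team's software. The twin overwrites its state whenever a sensor reading arrives and, between readings, extrapolates the car's position assuming constant speed. Real digital twins run far richer physics models; this only shows the basic sync loop.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    time: float      # seconds since the session started
    distance: float  # meters traveled along the lap
    speed: float     # meters per second


class CarTwin:
    """Hypothetical digital twin mirroring one car's position on track."""

    def __init__(self):
        self.last = None  # latest reading received from the real car

    def ingest(self, reading: SensorReading) -> None:
        # Sync step: the twin adopts the real system's latest measured state.
        self.last = reading

    def estimate_distance(self, time: float) -> float:
        # Between readings, extrapolate assuming the speed stays constant.
        if self.last is None:
            raise ValueError("no sensor data received yet")
        return self.last.distance + self.last.speed * (time - self.last.time)


twin = CarTwin()
twin.ingest(SensorReading(time=10.0, distance=500.0, speed=80.0))
print(twin.estimate_distance(10.5))  # 540.0
```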

Formula One is an industry where digital twins have changed the game. Gone are the days when speed and aerodynamic design were enough to set a team apart. In a sport that has become synonymous with continuous technological improvement, teams have turned to digital twin technology to get a leg up on the competition. Red Bull and Mercedes, the dominant teams of the past decade, have invested heavily in this technology.

The younger generation of drivers, including Max Verstappen, has honed its driving skills on racing simulators, which are a relatively simple form of digital twin. As an anecdotal aside, Max Verstappen raced in F1 before he even had a driver's license. The digital revolution is changing every game.

The drivers are merely the public interface of an F1 racing team. Behind every pair of drivers are hundreds of very smart and very competitive people, who all rely on digital twin models to understand how the car behaves on the track. The cars continuously collect and transmit data, which lets the team's engineers model both the car's performance and race strategy.

Digital twins allow teams to test and validate their understanding of the racing system by comparing the model's predictions to real-world data. If the predictions match the data, the team gains confidence in its understanding of the system. If they do not, the mismatch tells the team where to refine and improve the model.
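This validate-then-refine loop can be sketched in a few lines of Python. Everything here is hypothetical: the linear tire-wear model, the tolerance, and the lap times, which are synthetic data generated from an assumed "true" wear rate plus noise rather than real telemetry. The model's predictions are compared to the observations, and only if the error exceeds the tolerance is the model refitted with ordinary least squares.

```python
import random


def predict_lap_times(base, degradation, laps):
    """Hypothetical model: lap time grows linearly as the tires wear."""
    return [base + degradation * lap for lap in laps]


def mean_abs_error(predicted, observed):
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(observed)


def refit(laps, observed):
    """Ordinary least-squares fit of (base, degradation) to observed lap times."""
    n = len(laps)
    mean_x = sum(laps) / n
    mean_y = sum(observed) / n
    sxx = sum((x - mean_x) ** 2 for x in laps)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(laps, observed))
    degradation = sxy / sxx
    return mean_y - degradation * mean_x, degradation


# Synthetic "telemetry" for illustration only: an assumed true wear rate of
# 0.12 s per lap plus measurement noise. This is not real F1 data.
rng = random.Random(42)
laps = list(range(1, 21))
observed = [90.0 + 0.12 * lap + rng.gauss(0, 0.05) for lap in laps]

TOLERANCE = 0.1  # seconds; an arbitrary threshold for this sketch
base, degradation = 90.0, 0.05  # current model: underestimates tire wear
initial_error = mean_abs_error(predict_lap_times(base, degradation, laps), observed)
error = initial_error
if error > TOLERANCE:
    # Predictions and data disagree: refine the model from the data.
    base, degradation = refit(laps, observed)
    error = mean_abs_error(predict_lap_times(base, degradation, laps), observed)

print(f"error before: {initial_error:.3f} s, after: {error:.3f} s")
print(f"refitted wear rate: {degradation:.3f} s/lap")
```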

Outside Formula One, the digital twin approach is popular in industrial, telecom, and manufacturing settings. Done well, digital twins help optimize the performance of physical assets and predict their maintenance needs or their behavior under different conditions.

One example closer to home is Skyline Communications, a Flemish company whose global product acts as a digital twin for operations in the ICT, media, and broadband industries. They may fly well below the radar, but that hasn’t kept them from achieving significant, consistent growth and impressive financials.

While I’m clearly a fan of modeling and computation, there are limits to what it can achieve. I’ll touch on those next week before coming back up the stack.

The best of the rest

While I still write the traditional way (through coffee, sweat, and tears), more and more people are turning to machine learning models to automate content production.

Hugging Face has a collection of interesting ChatGPT prompts you can use for all kinds of creative purposes (have ChatGPT act as a spreadsheet builder, a screenwriter, a debugger, a plagiarism checker, a cybersecurity specialist…).

One engineer created a script that summarizes arXiv papers and turns them into podcasts.

All this raises some philosophical questions. When content becomes abundant, attention becomes scarce. How do we avoid drowning in content sludge? And what moats can companies still claim? Community? Meaning?

Tech debt (or UX debt) tends to creep up on teams. John Cutler illustrates the process in the visual below. When stakeholders ask for a business case to refactor a product, consider also preparing one for a total rewrite, because that is just around the corner:

Speaking of tech debt, Southwest Airlines suffered a holiday meltdown in a classic story of public companies prioritizing short-term stock prices over healthy software foundations. The longer companies ignore these signals, the higher the price they pay. In this case, it was a planning crisis. Next time, maybe ransomware and a total lockout. Ignore it long enough, and the system collapses.

Further reading

“Complex Adaptive Systems: An Introduction to Computational Models of Social Life”: a very readable yet scientific introduction to computational modeling by John Miller and Scott Page

“How Nature Solves Problems Through Computation”: an article in Quanta Magazine featuring data scientist and evolutionary biologist Jessica Flack