S01E10 Navigating complex, adaptive systems

System mapping 101: graphs, maps, and diagrams

The Internet has democratized access to knowledge, but it has also provoked an epistemic crisis. The unwashed but now interconnected masses are challenging experts and institutions. Some consider this a curse (Umberto Eco speaks of “legions of idiots”), while others, like Pierre Levy, have pointed out the potential for collective intelligence:

What is collective intelligence? It is a form of universally distributed intelligence, constantly enhanced, coordinated in real time, and resulting in the effective mobilization of skills.

My initial premise is based on the notion of a universally distributed intelligence. No one knows everything, everyone knows something, all knowledge resides in humanity.

Leveraging collective intelligence toward action for good has proven harder than leveraging the madness of crowds toward disinformation and chaos. But the same is true of the analog world: it is always harder to build than it is to destroy. Our species is still figuring out the coordinating mechanisms that unlock our ability for networked cooperation.

That is the mission statement of this publication: to explore the mechanisms that can help us level up our powers of coordination. In the next few episodes, I look for ways to better understand the complex, adaptive systems we live in. Levy was right that nobody knows everything, but everyone knows something. How can we tap into this collective intelligence?

The smartest person in the room is the room itself

There is a reason why post-its and workshops are the go-to tools for consultants. Creating maps of systems is a way to harness the collective knowledge, resources, and perspectives of those around us. If we can make this intelligence visible and accessible to more people, we can accelerate our ability to learn and grow together. But how do we make a map of a complex system?

One expert explainer of complex systems is Simon Wardley. He invented Wardley mapping: a technique for strategy mapping that has played a significant role in the digital transformation of the UK’s Government Digital Service. I’ll come back to Wardley mapping in more detail in a later episode, but I want to start this exploration with some fundamental truths that Simon Wardley discovered about mapping:

The entire video is a masterclass in explaining and well worth watching, but I want to highlight two takeaways:

It is easier to argue maps than to argue narratives

A map is a factual way of exploring and explaining a landscape. An alternative approach is to use storytelling to make sense of our surroundings. Storytelling may be a great sales tool, but when it comes to decision-making and alignment, it is problematic.

Narratives are personal things, tightly coupled with people’s belief systems. When narrative lines are drawn in the sand, discussions tend to get messy and political. A disagreement about the lines on a map is generally more constructive.

Graphs vs maps

A graph is a set of nodes connected by lines. These nodes usually represent the components of the system (e.g., users of a social network, or animals in an ecology), and the lines represent the relationships or interactions between those components (e.g., friendships between users, or who eats whom). Graphs are most often used to represent and analyze relationships between different objects or concepts.
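To make the definition concrete, here is a minimal sketch of a graph as a data structure, using the "who eats whom" example. The species and the helper names (`build_graph`, `predators_of`) are hypothetical and chosen only for illustration; a real analysis would more likely use a dedicated graph library.

```python
def build_graph(edges):
    """Build a directed graph as a dict: node -> set of nodes it points to."""
    graph = {}
    for source, target in edges:
        graph.setdefault(source, set()).add(target)
        graph.setdefault(target, set())  # make sure every node is present
    return graph


def predators_of(graph, prey):
    """Return, in alphabetical order, every node with an edge pointing at `prey`."""
    return sorted(node for node, eaten in graph.items() if prey in eaten)


# Hypothetical food web: each pair reads "first eats second".
food_web = build_graph([
    ("fox", "rabbit"),
    ("owl", "rabbit"),
    ("rabbit", "grass"),
])

print(predators_of(food_web, "rabbit"))
```

Note that nothing in this structure says where a node sits on the page: only the relationships carry information.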

Maps, on the other hand, are used to navigate a landscape and to reason about movement across it. That means space itself has to carry meaning in the visual representation:

As usual, there are no silver bullets; one method isn’t better than the other. Both mechanisms can help us get a better understanding of the systems we live in. Graphs focus on the analysis of relations between components, while maps focus on the position and direction of components.

What about feedback? In previous episodes, I established feedback as a key characteristic of complex, adaptive systems, but neither graphs nor maps focus on the feedback loops between components.

One way to articulate our understanding of the interconnections in a system is through a causal loop diagram. It is possible to link key variables together into sentences that indicate the causal relationship between them. By stringing together several of these loops, we can arrive at a coherent description of the system.

I’ll illustrate this idea with a well-known example of a dynamic, interconnected, and complex problem: the Covid-19 pandemic.

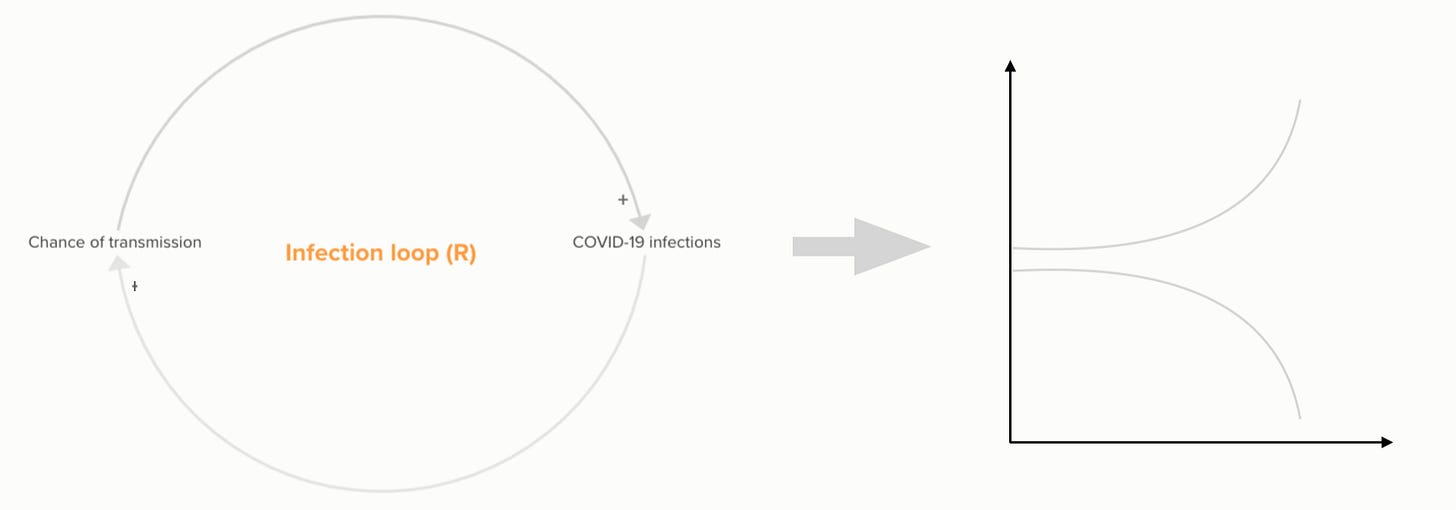

A causal loop diagram attempts to map the most important variables in a given system. In the pandemic case, that might be the number of infections and the chance of viral transmission. These variables impact each other, and together they create a reinforcing feedback process. Whenever we see compounding growth or decline in a system, there is reinforcing feedback at play: a variable continually feeding back upon itself.

Reinforcing loops do not endlessly reinforce their own growth (or collapse). Eventually, they run out of steam. Balancing loops are the yang to the reinforcing yin; they counteract the change happening in a system. They seek goals and push towards equilibrium. Whenever we see goal-oriented behavior in a system, there is balancing feedback involved, as illustrated in the loop I added to the diagram:

It is possible to extend causal loop diagrams as far as our comprehension of the system allows. In the pandemic example, we could add different vaccination scenarios to the model. In that case, relevant variables might be the availability of vaccines, the willingness to get vaccinated, different compliance policies, etc.

At the end of the day, the selection of variables and the framing of their relationships are subjective. In the pandemic example, I included government-issued restrictions as a way to dampen the undesired reinforcing feedback loop. You may disagree and offer an alternative control loop instead. By articulating the expected causal loops, policy discussions become more factual and less entangled.
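One way to write such a diagram down is as a signed directed graph: each link is marked +1 when the effect moves in the same direction as the cause and -1 when it moves in the opposite direction. A loop with an even number of negative links is reinforcing; an odd number makes it balancing. The sketch below assumes a particular set of links and signs for the pandemic example. They are my reading of the loops described here, not a transcription of the diagram, and the names `links` and `loop_polarity` are hypothetical.

```python
# Assumed causal links: (cause, effect) -> sign.
# +1: effect moves in the same direction as the cause; -1: opposite direction.
links = {
    ("infections", "transmission chance"): +1,
    ("transmission chance", "infections"): +1,
    ("infections", "restrictions"): +1,
    ("restrictions", "transmission chance"): -1,
}


def loop_polarity(loop, links):
    """Classify a closed loop, given as a list of variables in causal order."""
    sign = 1
    # Pair each variable with the next one, wrapping around to close the loop.
    for cause, effect in zip(loop, loop[1:] + loop[:1]):
        sign *= links[(cause, effect)]
    return "reinforcing" if sign > 0 else "balancing"


print(loop_polarity(["infections", "transmission chance"], links))
print(loop_polarity(["infections", "restrictions", "transmission chance"], links))
```

Disagreeing with the model then becomes a matter of proposing a different entry in `links`, which is easier to discuss than a competing story.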

Policy and strategy are hard because we are looking for causal threads in a haystack of interacting variables. Will lockdowns suppress the virus? Will agile transformation programs result in shareholder value? Causal loop diagrams will not answer these questions for you, but they are likely to improve the rigor of thinking and expose biases and hidden assumptions.

If these diagrams look unwieldy, it might help to think of them as functions that describe how the system behaves over time. Reinforcing loops, for example, translate into exponential curves:

When we add the balancing loop, we expect it to ‘flatten the curve’ and act as a brake. We can also call this goal-seeking behavior:
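A minimal numerical sketch of both behaviors, under assumptions of my own: discrete time steps, a fixed growth rate, and a balancing loop modeled as a brake that tightens as the variable approaches a limit (a logistic form). The function names and parameter values are hypothetical and not calibrated to any real epidemic.

```python
def reinforcing(start, rate, steps):
    """Reinforcing loop only: each step adds a fixed fraction of the current value."""
    values = [start]
    for _ in range(steps):
        current = values[-1]
        values.append(current + rate * current)
    return values


def with_balancing(start, rate, limit, steps):
    """Same loop plus a balancing brake that strengthens as the value nears `limit`."""
    values = [start]
    for _ in range(steps):
        current = values[-1]
        brake = 1 - current / limit  # close to 1 far from the limit, 0 at the limit
        values.append(current + rate * current * brake)
    return values


unchecked = reinforcing(start=1.0, rate=0.5, steps=40)
flattened = with_balancing(start=1.0, rate=0.5, limit=100.0, steps=40)

print(f"after 40 steps, reinforcing only: {unchecked[-1]:.0f}")
print(f"after 40 steps, with balancing loop: {flattened[-1]:.1f}")
```

The first series keeps compounding, while the second starts out almost identically and then levels off near the limit: the goal the balancing loop is seeking.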

Practitioners of systems thinking and system dynamics learn that similar patterns of behavior show up in a variety of different situations. The underlying structures are always different, but they often result in a handful of characteristic patterns:

Next week I will dive deeper into the systems theory rabbit hole, and I’ll explore the art of modeling complex systems.

Further reading

Knowledge ecologist Christina Bowen

Expert Wardley mapper Hired thought

A kindred spirit I recently discovered: Luke Craven